Truth be told, the word ‘truth’, or describing something as ‘true’, is bound to be false or inaccurate. As described in one of my earlier posts [Doubt may be unpleasant, but certainty is absurd], we can never be certain.

However, there are some discrete anthropocentric models or concepts that might be regarded as ‘true’. Yet these are nothing more than agreements between humans, and sometimes very arbitrary ones, like what makes a centimeter or a meter, or when we call something a heap and when a grain of sand [See also: Solving the Sorites Paradox]. We can be certain about a meter, but to say that this is the truth misses the point. Truth is often used to suggest that there is some objective reality we can relate to, and that therefore something is either true or not. What is wrong with the word ‘truth’ is that it simultaneously claims to be objective and certain. Certainty only resides in our heads and is thus subjective. Knowledge about everything that takes place outside of our heads will only be an approximation and will never go beyond being highly likely. For all we know, we are just a brain in a vat.[1] It is as the philosopher Voltaire once famously said: doubt is an unpleasant state of mind, but certainty is an absurd one.

I will argue that the word ‘truth’ is absolute and that we ought to use terminology that speaks of likelihoods, because our (scientific) knowledge of the world is not certain or absolute. Another reason why truth is a bad choice of words is that it is so easily misunderstood and misused. It becomes a problem when someone who thinks he or she is right claims to have the truth, often without supporting the statement with evidence. Religions are a good example of entire hordes of people claiming to have access to ‘the Truth’. But the word is also used in less obvious ways. There is no better example of this than the so-called social media app Donald Trump created, called ‘Truth Social’. Naming an app or statement ‘truth’ does not automatically make it so, yet it may well have that effect on some of its users and readers.

One could argue that replacing the word truth with a likelihood leads to the same results, but I disagree. First of all, likelihoods inherently accept fallibility, meaning you might still be wrong. This is important because it creates room for discussion and possibly for finding agreement, something our society is desperately in need of. Second, likelihoods come in degrees and urge the user or listener to think about what degree of likelihood is applicable. Truth is absolute: something is either true or not. Determining a likelihood requires a closer look at the evidence and possible errors. Moreover, it makes people better at forecasting and making predictions.

In a forecasting tournament organized by the Intelligence Advanced Research Projects Activity (IARPA), the research organization of the federation of American intelligence agencies, the researchers Tetlock and Mellers were able to identify some ‘superforecasters’, who performed much better than average at making accurate predictions. These superforecasters were: pragmatic experts who drew on many analytical tools, with the choice of tool hinging on the particular problem they faced. These experts gathered as much information as they could. When thinking, they often shifted mental gears, sprinkling their speech with transition markers such as “however”, “but”, “although” and “on the other hand”. They talked about possibilities, probabilities, not certainties. And while no one likes to say, “I was wrong”, these experts more readily admitted it and changed their minds. … Moreover, superforecasters are Bayesian.[2]

From probabilities and likelihoods to predictions.

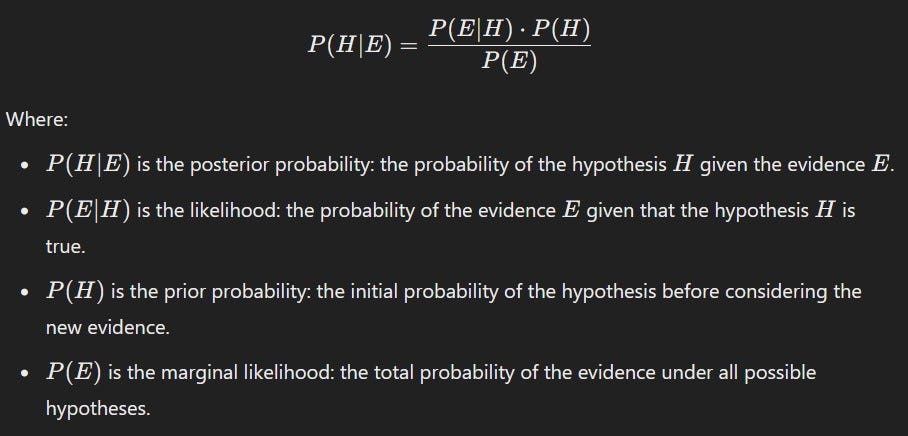

What is meant by probabilities or likelihoods? I am not talking about classical theoretical probabilities, which assume that all outcomes are equally likely and can therefore be calculated exactly; mathematical equations of that kind can give you ‘certainty’. I am talking about empirical probability (i.e. based on observations and experiments) and subjective probability, which is based upon personal judgement. What is of interest here is what is known as Bayes’ Theorem. This theorem is a mathematical formula used to update the probability of a hypothesis based on new evidence; evidence that supports the hypothesis is also known as corroboration. It provides a way to revise existing predictions or theories by incorporating new information. Bayes' Theorem allows for a systematic and quantitative update of probabilities, reflecting how new evidence (E) affects the likelihood (P) of a hypothesis (H) being correct. The theorem is expressed as:

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}$$

Here P(H) is the probability of the hypothesis before the new evidence, P(E | H) is the probability of observing the evidence if the hypothesis is correct, P(E) is the overall probability of observing the evidence, and P(H | E) is the updated probability of the hypothesis.
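To make the update concrete, here is a minimal sketch of a single Bayesian update applied three times in a row. The function name `bayes_update` and all the numbers are hypothetical and chosen only for illustration; P(E) is computed from the two cases ‘H is correct’ and ‘H is not correct’.

```python
def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H|E) given P(H), P(E|H) and P(E|not H)."""
    # P(E): total probability of the evidence over both cases.
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    if p_e == 0.0:
        raise ValueError("the evidence has zero probability in both cases")
    return p_e_given_h * prior / p_e


# Hypothetical numbers: we start undecided (0.5) and each observation
# is twice as probable if H is correct (0.8) as if it is not (0.4).
belief = 0.5
for observation in range(1, 4):
    belief = bayes_update(belief, p_e_given_h=0.8, p_e_given_not_h=0.4)
    print(f"after observation {observation}: P(H|E) = {belief:.3f}")
# The belief rises to roughly 0.667, 0.800 and 0.889, but never reaches 1.
```

Note that the belief keeps growing with every supporting observation, yet it only approaches certainty and never arrives there.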

What we normally do when trying to make predictions (i.e. hypotheses) is extrapolate from past and current observations, in other words use induction. However, recall our example of Russell’s chicken [See: Induction and Deduction: two sides of the same coin?], where we concluded that induction (on its own) is false. If the chicken were to make a prediction (H) about the behavior of the farmer for tomorrow or the day after, it would likely say ‘there is a high probability (P)’, or ‘it is very likely’, ‘that the farmer will feed me tomorrow’. The daily feeding is the evidence (E) that is being collected day after day. Although we know that the explanation of why the farmer feeds the chicken is false, namely that the farmer is just really fond of the chicken, the overall probability will still remain high. There is only one occasion on which the prediction fails; for the rest it is always accurate. Now take into account the following quote of Deutsch when explaining how difficult it is to make observations of the natural world: “Most of what happens is too fast or too slow, too big or too small, or too remote, or hidden by opaque barriers, or operates on principles too different from anything that influenced our evolution.”[3] What if we are making predictions based upon observations of something that just happens too slowly for us human beings, like for example the many evolutionary changes our world has known?[4] We might be the naive chicken that is still mistaken about the intentions of the farmer; we are just still months away from Thanksgiving and have never witnessed it yet. In such a case, all your predictions so far will have been correct, or at least very likely.
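How the chicken's confidence could grow can be sketched with Laplace's rule of succession, which estimates the probability of the next success as (successes + 1) / (trials + 2). This rule is an assumption introduced here purely for illustration; it is not taken from Russell or Deutsch, and the function names are hypothetical.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's estimate of the probability that the next trial succeeds."""
    return Fraction(successes + 1, trials + 2)


def chicken_confidence(days_fed: int) -> Fraction:
    """The chicken has been fed on every single day it has observed so far."""
    return rule_of_succession(successes=days_fed, trials=days_fed)


for days in (1, 10, 100, 300):
    p = chicken_confidence(days)
    print(f"after {days:>3} feedings: P(fed tomorrow) = {float(p):.3f}")
```

Under this assumption the estimate only ever goes up, so the chicken is at its most confident on the very morning the prediction fails.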

Nassim Nicholas Taleb also uses this same example, albeit with a turkey instead of a chicken, in order to argue that induction is false and that you cannot use it to make effective predictions. The event where the farmer, against all odds, kills the turkey or chicken is what Taleb describes as a ‘Black Swan’ event. A Black Swan is an event that is highly unpredictable and improbable but has a large impact. He goes on to describe that we often haven’t encountered the black swan yet, and thus do not know how to predict or anticipate it.[5] To conclude this point, we might be making very good predictions but nonetheless be wrong about the explanation, as in the example of Russell’s chicken. A high probability does not equal a good explanation. In other words, a high probability does not necessarily mean that you are closer to the truth.

But what about corroboration, does this not help us get closer to truth? Corroboration is when evidence supports a hypothesis and thereby strengthens the credibility of the hypothesis. This means that there is already evidence verifying the hypothesis, and all evidence adding to this verification can be seen as corroboration. This corroboration is only valuable when there has not yet been a single refutation of the hypothesis, since a refutation would eliminate all the corroboration, no matter how much there is of it. It is impossible to know how much corroboration (evidence) is necessary and, most importantly, whether we are missing the crucial evidence that the entire hypothesis is false, because we are, for example, misinterpreting cause and effect. How much corroborating evidence do you need to classify a hypothesis as probable, and how much to classify it as likely correct? What if I have not looked in the right spot for corroborating evidence? Perhaps I have only searched for evidence that corroborates my own hypothesis, and not that of competitors. In that case, I would surely establish that my hypothesis is better corroborated by the available evidence.

Let’s say we have two competing[6] hypotheses, and one has substantially more corroboration than the other. Which one would you choose as being closer to the truth? Intuitively I would choose the hypothesis that is supported by more and better evidence. However, this still does not necessarily mean it is therefore more correct. This becomes more apparent when the evidence for one hypothesis only slightly outweighs the evidence for the competing hypothesis. There are fine tools like the Analysis of Competing Hypotheses (ACH) that can weigh each hypothesis by its consistency or inconsistency with the available evidence, but even these tools are full of subjective criteria.[7]
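The subjectivity can be illustrated with a heavily simplified tally in the spirit of ACH. This is a sketch under stated assumptions, not the actual ACH procedure or software: the penalty values, the labels H1, H2, E1 and E2, and the function name `inconsistency_totals` are all hypothetical. Each piece of evidence is rated against each hypothesis, and the hypothesis with the lowest total inconsistency is preferred.

```python
# Hypothetical penalties per rating; a lower total means less inconsistency.
PENALTY = {"consistent": 0, "inconsistent": 1, "very inconsistent": 2}


def inconsistency_totals(ratings: dict[str, dict[str, str]]) -> dict[str, int]:
    """Sum the inconsistency penalties per hypothesis over all evidence."""
    totals: dict[str, int] = {}
    for per_hypothesis in ratings.values():
        for hypothesis, label in per_hypothesis.items():
            totals[hypothesis] = totals.get(hypothesis, 0) + PENALTY[label]
    return totals


# First analyst: each hypothesis conflicts with one piece of evidence.
ratings = {
    "E1": {"H1": "inconsistent", "H2": "consistent"},
    "E2": {"H1": "consistent", "H2": "inconsistent"},
}
print(inconsistency_totals(ratings))  # {'H1': 1, 'H2': 1}, a tie

# Second analyst: same evidence, but E2 is judged 'very inconsistent' with H2.
rerated = {**ratings, "E2": {"H1": "consistent", "H2": "very inconsistent"}}
print(inconsistency_totals(rerated))  # {'H1': 1, 'H2': 2}, H1 now leads
```

Nothing about the evidence changed between the two runs; a single judgment call about the wording of one rating decides which hypothesis comes out ahead.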

Explanations.

Instead of trying to increase the amount of corroboration for a specific hypothesis, while of course at the same time trying to falsify it, we should try to modify the hypothesis itself by finding a better explanation. Take for example Russell’s chicken again and its two competing hypotheses: one being that the farmer is fond of the chicken, the other that the farmer wants the chicken to become fat and juicy in order to have more nutritional value in the end. We can measure the amount of evidence we find when observing the behavior of the farmer and use it to corroborate or falsify one of the hypotheses. But as said earlier in relation to making predictions, what if the ‘butcher event’ has not occurred yet? We would then conclude on the basis of corroboration that the hypothesis of the kind farmer is ‘likely’ correct. Now, instead of corroborating the hypothesis by seeking evidence, we take a step back, analyze the bigger picture and look for elements of the explanation that are too easy to vary. For example, we take into consideration that the farmer has approximately 5000 other chickens as well. Why would the farmer make all this effort and spend this much money to feed them all? Is he really fond of all the chickens? Why did he put them in these small cages and not out in the fields? We can ask many more questions of this sort, but what we will eventually conclude is that ‘feeding’ on its own does not make fondness a good explanation for the farmer’s behavior at all, because too many different explanations are equally possible. Instead of sticking to the original explanation, we continue to enhance it by trying to explain other elements. We would then, for example, start investigating the rest of the building and find that the farmer also has a large assortment of sharp knives nearby, or perhaps some very efficient small transportation boxes specifically designed to transport as many chickens as possible. Then there is this truck that always comes and takes them, with large letters on the side displaying ‘The best Butcher in town’ and ‘Chicken is delicious’. We would then go on and try to find an explanation for those elements too, and so on.
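One hedged way to see why counting feedings cannot settle the matter is to compare the two hypotheses in the odds form of Bayes' Theorem. The sketch below assumes that both the ‘fond farmer’ and the ‘fattening farmer’ predict daily feeding equally well; all probabilities and function names are hypothetical and serve only as an illustration.

```python
def update_odds(odds: float, p_e_given_h1: float, p_e_given_h2: float) -> float:
    """Multiply the odds of H1 against H2 by the likelihood ratio of one observation."""
    return odds * (p_e_given_h1 / p_e_given_h2)


def odds_to_probability(odds: float) -> float:
    """Convert odds of H1 against H2 into the probability of H1."""
    return odds / (1.0 + odds)


# H1: the farmer is fond of the chicken. H2: the farmer is fattening it.
odds = 1.0  # assumption: no initial preference for either hypothesis

# 100 feedings, assumed equally probable (0.99) under both hypotheses.
for _ in range(100):
    odds = update_odds(odds, p_e_given_h1=0.99, p_e_given_h2=0.99)
odds_after_feeding = odds
print(odds_after_feeding)  # 1.0: the feedings did not favor either hypothesis

# One observation of a different kind: the butcher's truck. Assumed to be
# improbable if the farmer is fond (0.05) and probable if he is fattening (0.90).
odds_after_truck = update_odds(odds_after_feeding, p_e_given_h1=0.05, p_e_given_h2=0.90)
print(round(odds_to_probability(odds_after_truck), 3))  # about 0.053 for H1
```

With these assumed numbers, a hundred feedings leave the comparison exactly where it started, while a single observation from elsewhere on the farm shifts it sharply.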

In order to enhance your original explanation, as in taking a step back to analyze the bigger picture, you need observations (induction). It is a fallacy that you can get there by only using rationality (deduction) [See also: Induction and Deduction: two sides of the same coin?]. What you do need is the willingness to potentially abandon your original explanation in search of a better one. Instead of counting the number of times the farmer feeds his chicken, you start wandering around the farm, looking for other clues that might have nothing to do with feeding at all. To summarize the difference between corroboration and an explanation: you do not outweigh the other explanation by having more corroborating evidence, you out-argue the other explanation by proposing new arguments that are harder to vary.[8] When we have decided that we have a better explanation, we can start searching for corroborating evidence for it, or even better, evidence that falsifies the entire explanation.

The real question is this: when do you decide that a new explanation is required? We often first need to be triggered by some error or anomaly in order to abandon our earlier explanations. Geocentrism, the idea that everything revolves around Earth, was only abandoned when Galileo found a more compelling explanation for the phases of Venus, which geocentrism could not explain. In other words, even if we are pretty sure about some things in this world, we should always remain curious and skeptical and keep looking around the farm for clues, for perhaps our explanations were wrong after all. If this is the world we live in, who then needs words like ‘truth’?

[1] This refers to the philosophical thought experiment by Harman and Putnam, which inspired movies like The Matrix.

[2] Steven Pinker, Enlightenment Now: the Case for Reason, Science, Humanism, and Progress, pp. 368-369.

[3] David Deutsch, The Beginning of Infinity, p. 37. Deutsch says this while explaining that we can hardly ever make observations unaided, but the quote is also very applicable here, in another context.

[4] Intelligent Design uses this as an argument against evolution.

[5] Nassim Nicholas Taleb, The Black Swan: The Impact of the Highly Improbable

[6] Competing: meaning they are mutually exclusive. Evidence for one hypothesis cannot also be evidence for the other hypothesis.

[7] The ACH method was developed by Richards Heuer; a software implementation was created by PARC. It is subjective because, for example, you can decide whether something is inconsistent or very inconsistent. But when does something become very inconsistent, instead of merely inconsistent?

[8] But how to classify a ‘better’ explanation? Should we still continue to use the language of probabilities, or should we stick with ‘better’ or ‘worse’?

Bibliography:

- Nassim Nicholas Taleb, The Black Swan: The Impact of the Highly Improbable (London: Penguin Group, 2007)

- David Deutsch, The Beginning of Infinity: Explanations that Transform the World (London: Penguin Books, 2012)

- Steven Pinker, Enlightenment Now: the Case for Reason, Science, Humanism, and Progress, (New York: Penguin Random House, 2018)

Picture: created by AI (Bing)

I presented the mathematical model summing up this view that likelihoods (i.e. their ratios) should replace true belief in "Belief and the Incremental Confirmation of One Hypothesis Relative to Another" (PSA 1978 Volume One).